Kubernetes is a container orchestration system. It lets us run containers across multiple servers (physical or virtual). This happens automatically: we tell Kubernetes how many containers to create from a specific image, and it does the rest. Kubernetes takes care of:

- Automatic deployment of containerized applications across different servers.

- Distribution of the load across multiple servers, which avoids under- or over-utilizing them.

- Auto-scaling of the deployed applications.

- Monitoring and health checks of the containers.

- Replacement of failed containers.
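
Telling Kubernetes how many containers to create from a given image is typically done with a Deployment manifest. As a rough sketch, the Python snippet below builds such a manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The name `web`, the image `nginx:1.25`, and the replica count of 3 are illustrative assumptions, not values from this article.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # How many copies of the Pod Kubernetes should keep running.
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical values, chosen only for illustration.
    manifest = make_deployment("web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it would ask the cluster to keep three Pods of that image running; changing `replicas` and applying again scales the application up or down.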

Kubernetes supports several container runtimes:

- Docker Engine (since Kubernetes 1.24, through the external cri-dockerd adapter)

- CRI-O

- containerd (pronounced "container-dee")

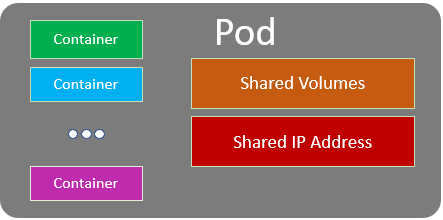

Pod

In Docker, the container is the smallest unit. In Kubernetes, the smallest deployable unit is the Pod.

A Pod can contain one or more containers. Containers in the same Pod share volumes and network resources (for example, the Pod's IP address).

In the most common use case, a Pod holds a single container. However, when several containers are tightly coupled, they can be placed together in one Pod. Keep in mind that a Pod always runs on a single server; its containers are never spread across servers.
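
To make the multi-container case concrete, here is a minimal sketch of a Pod manifest with two containers that share one volume, again built as a Python dictionary. All names here (`web-with-sidecar`, `shared-data`, `content-writer`), the images, and the mount path `/data` are hypothetical and chosen only for illustration.

```python
import json


def make_two_container_pod() -> dict:
    """Build a v1 Pod manifest whose two containers mount the same volume."""
    # Both containers mount the same volume at the same path (hypothetical names).
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web-with-sidecar"},
        "spec": {
            # An emptyDir volume lives as long as the Pod does.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "content-writer",
                    "image": "busybox:1.36",
                    # Writes a file that the other container can read.
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date > /data/index.html; sleep 5; done",
                    ],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_two_container_pod(), indent=2))
```

Because both containers belong to the same Pod, they also share its network namespace and can reach each other on `localhost`.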

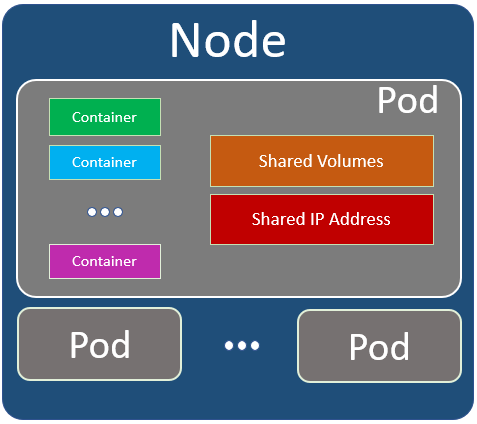

Node

A Node is a server (physical or virtual). It can run one or more Pods.

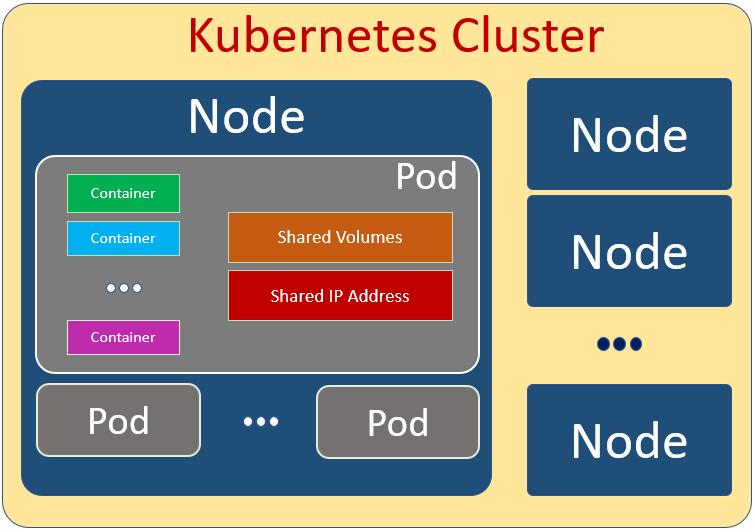

Kubernetes Cluster

A Kubernetes Cluster contains several Nodes. The Nodes can be in different locations, but usually the Nodes of a cluster are placed close to each other so that they can work together more efficiently.

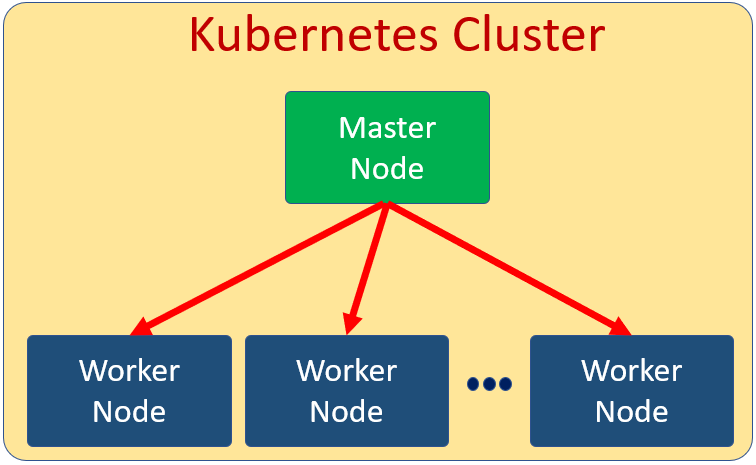

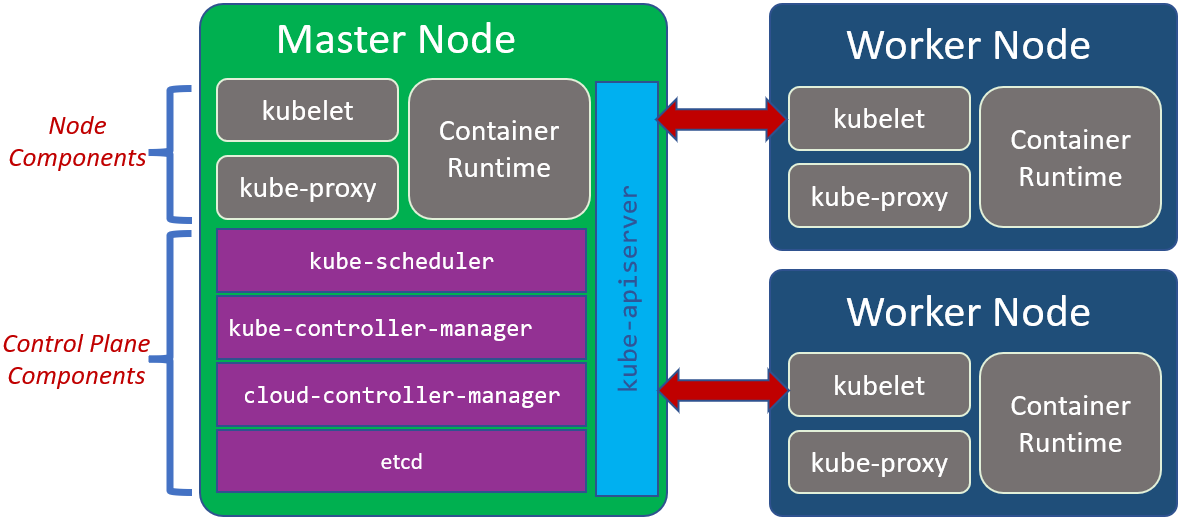

A Kubernetes Cluster has a Master Node (called a control plane node in current Kubernetes documentation). The other Nodes in the cluster are called Worker Nodes.

The Master Node manages the Worker Nodes. It is the Master Node's job to distribute the load across the Worker Nodes. All Pods belonging to our applications are deployed on the Worker Nodes. The Master Node runs only system Pods, which are responsible for the operation of the Kubernetes Cluster itself. In short, the Master Node controls the Worker Nodes and, by default, does not run our applications.

Every cluster has at least one Worker Node. Each Node runs several components or services, shown roughly in the following pictures.

Node Components:

Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

- Container runtime: The container runtime is the software that is responsible for running containers.

- kubelet: The kubelet is an agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

- kube-proxy: The kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept. It is responsible for network communication within each Node and between Nodes.
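
The Service routing that kube-proxy implements can be pictured with a toy model: a Service name maps to the IP addresses of the Pods behind it, and each new connection is sent to one of them. The sketch below is a simplified in-memory illustration, not how kube-proxy is actually implemented; the class `ServiceProxy`, the random backend choice, and the IP addresses are assumptions made for the example.

```python
import random


class ServiceProxy:
    """Toy stand-in for the Service routing that kube-proxy sets up on a node."""

    def __init__(self, seed=None):
        self._backends: dict[str, list[str]] = {}  # service name -> Pod IPs
        self._rng = random.Random(seed)

    def set_endpoints(self, service: str, pod_ips: list[str]) -> None:
        # Called whenever the set of healthy Pods behind a Service changes.
        self._backends[service] = list(pod_ips)

    def route(self, service: str) -> str:
        # Pick one backend Pod for a new connection.
        backends = self._backends.get(service)
        if not backends:
            raise LookupError(f"no endpoints for service {service!r}")
        return self._rng.choice(backends)


if __name__ == "__main__":
    proxy = ServiceProxy(seed=0)
    # Hypothetical Pod IPs, possibly on different Nodes.
    proxy.set_endpoints("web", ["10.244.1.5", "10.244.2.7"])
    for _ in range(4):
        print("web ->", proxy.route("web"))
```

The point of the indirection is that clients talk to the Service name, so Pods can be replaced or moved to another Node without the clients noticing.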

Control Plane Components:

The control plane’s components make global decisions about the cluster (for example, scheduling), as well as detecting and responding to cluster events (for example, starting up a new pod when a deployment’s replicas field is unsatisfied).
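
The example of starting a new Pod when a Deployment's replicas field is unsatisfied can be illustrated with a toy reconciliation step. This is a simplified in-memory model under stated assumptions, not the actual controller code: the function `reconcile`, the status strings, and the `web-N` naming scheme are hypothetical.

```python
import itertools


def reconcile(desired: int, pods: dict[str, str], name_source) -> dict[str, str]:
    """Return a new pod table that has exactly `desired` healthy pods.

    `pods` maps pod name -> status ("Running" or "Failed").
    `name_source` is an iterator that yields names for replacement pods.
    """
    # Drop failed pods; a real controller would delete them via the API server.
    healthy = {name: status for name, status in pods.items() if status == "Running"}

    # Start replacements until the desired count is reached.
    while len(healthy) < desired:
        healthy[next(name_source)] = "Running"

    # Scale down if there are more healthy pods than desired.
    while len(healthy) > desired:
        healthy.popitem()

    return healthy


if __name__ == "__main__":
    names = (f"web-{i}" for i in itertools.count(3))
    observed = {"web-0": "Running", "web-1": "Failed", "web-2": "Running"}
    print(reconcile(3, observed, names))
```

In this run, the failed Pod `web-1` is dropped and a replacement named `web-3` is started, so the observed state matches the desired three replicas again. Real controllers repeat this kind of comparison continuously.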

- kube-apiserver: The kube-apiserver is the component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the main point of communication between the different Nodes.

- kube-scheduler: The kube-scheduler is responsible for planning and distributing the load in the cluster. It watches for newly created Pods with no assigned node and selects a node for them to run on.

- kube-controller-manager: The kube-controller-manager runs controller processes. Among other things, these controllers monitor each Node in the cluster and react when one goes down.

- cloud-controller-manager: The cloud-controller-manager embeds cloud-specific control logic. It lets you link your cluster to your cloud provider's API. If we are running Kubernetes on our own premises, or in a learning environment on our own PC, the cluster does not have a cloud controller manager.

- etcd: etcd stores all cluster data in key-value format.
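
The scheduler's task of selecting a node for each unassigned Pod can be sketched with a toy example. The code below first filters out nodes that cannot fit the Pod and then picks the one with the most free CPU. The real kube-scheduler filters and scores nodes on many more criteria, so treat this only as an illustration; `Node`, `schedule`, and the CPU figures are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores available on the node
    pods: dict[str, int] = field(default_factory=dict)  # pod name -> requested millicores

    @property
    def cpu_free(self) -> int:
        return self.cpu_capacity - sum(self.pods.values())


def schedule(pod_name: str, cpu_request: int, nodes: list[Node]) -> Optional[str]:
    """Bind the pod to the node with the most free CPU; return its name, or None."""
    # Filter step: keep only nodes with enough free CPU for the pod.
    candidates = [n for n in nodes if n.cpu_free >= cpu_request]
    if not candidates:
        return None  # no node fits, so the pod stays pending

    # Score step: prefer the node with the most free CPU.
    best = max(candidates, key=lambda n: n.cpu_free)
    best.pods[pod_name] = cpu_request
    return best.name


if __name__ == "__main__":
    workers = [Node("worker-1", 2000), Node("worker-2", 1000)]
    for pod in ["web-0", "web-1", "web-2", "web-3"]:
        print(pod, "->", schedule(pod, 800, workers))
```

With these numbers, the first two Pods land on `worker-1`, the third on `worker-2`, and the fourth cannot be placed because no node has 800 millicores left.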